[1]about the onboard oxygen generation system seems to be the cause or is at least one of the possible causes to rule out. This has been an issue with fighter jets for some time now and has been investigated before. It doesn’t take a rocket scientist (pun intended) to realize that supplying oxygen to the pilot should be a huge priority, with a backup system in case of an emergency.

It will take time and careful investigation to determine the root cause. In the end, we will most likely need the wreckage to determine what actually caused the jet to crash. Regardless, we know the result, and it is not hard to imagine that every F-35 pilot is going to pause and consider the possibility going forward, not to mention the governments that will do the same. Even though what we do in the military is dangerous by design, we owe it to all of us to get better at applying advanced technologies by examining all of our assumptions.

Unfortunately, this type of design problem seems to be systemic in the way we implement advanced technologies. The real question going forward isn’t whether the technology is the right thing to do; it is whether we can make it safe and manage the risks. As we apply technology to virtually everything we encounter in our lives, we need to know that the engineers and companies building these things are using a repeatable and reliable process to analyze everything that could go wrong and that they have designed out those risks.

Back to the F-35: I am sure the militaries and governments involved with the development spent serious time and money analyzing every bolt and circuit. I also know that space and weight are significant constraints that must be taken into consideration.

As we continue to advance technology and use more sophisticated control and monitoring systems, we are also creating the possibility that our technological advancements could backfire and increase our risks. Just by adding networking and microprocessors, we run the risk of losing control of our creation to a mistake in code, a bad upload or firmware update, or a hack.

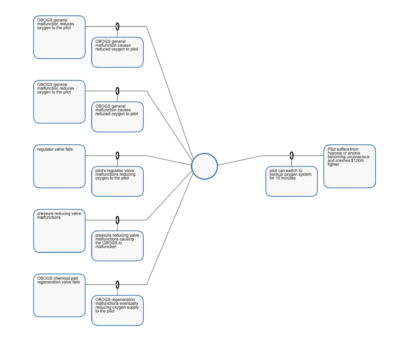

Considering the basic risk equation where risk is equal to likelihood multiplied by consequence, we look for ways to reduce likelihood and consequence to minimize risk.
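As a minimal sketch of that equation, the snippet below multiplies a likelihood by a consequence score and shows what happens when a safeguard lowers only the likelihood. Every number in it is a made-up placeholder chosen to illustrate the arithmetic; none comes from F-35 data or any real study.

```python
def risk(likelihood: float, consequence: float) -> float:
    """Basic risk equation: risk = likelihood x consequence."""
    if likelihood < 0 or consequence < 0:
        raise ValueError("likelihood and consequence must be non-negative")
    return likelihood * consequence


# Hypothetical values, for illustration only.
CONSEQUENCE = 100.0            # severity score on an arbitrary scale
BASELINE_LIKELIHOOD = 0.01     # assumed events per year with no safeguard
MITIGATED_LIKELIHOOD = 0.001   # assumed events per year with a safeguard

baseline = risk(BASELINE_LIKELIHOOD, CONSEQUENCE)
mitigated = risk(MITIGATED_LIKELIHOOD, CONSEQUENCE)

print(f"baseline risk:  {baseline:.2f}")
print(f"mitigated risk: {mitigated:.2f}")
```

Because the consequence is held fixed, any reduction in likelihood reduces the risk by the same proportion.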

In the F-35, one scenario to consider would be the pilot losing consciousness. Another might be the pilot losing control. Let’s look at both.

If the pilot loses control, the possible consequence might be a crash. A crash is bad, and we should try to avoid it. Since we cannot reduce the consequence, we look for ways to reduce the likelihood of that consequence occurring. I know that pilots get a lot of training on how to regain control, and we have probably built into many aircraft the ability for the computers to manage flight surfaces to help the pilot regain control so the consequence doesn’t occur. Both of these efforts reduce likelihood, thereby reducing the risk.

In the case where the pilot loses consciousness, is there something we could do to reduce the risk associated with an incapacitated pilot? Looking at reducing likelihood, we might consider using an alarm to warn the pilot that the oxygen in the breathing air is below acceptable limits or that the air is contaminated. That might give the pilot time to get the plane low enough to breathe outside air, if there was a way to get fresh air into the cabin. My military experience involved submarines, so we had to surface to get fresh air in the cabin, so to speak.

Maybe a better approach, considering this is a fighting asset, would be an alternate system like the ejection seat’s O2 tank to provide oxygen so the pilot could continue the mission in the event the onboard oxygen generation system malfunctions. This too would serve to reduce the likelihood that the scenario would lead to the crash consequence. However, this takes room and weight, and both are at a premium in this situation.

The news stated that both governments plan to find and recover the crashed jet. Additionally, the news stated long before the crash that a significant lab at Wright-Patterson AFB was developed to test the onboard oxygen generation system[2]. This is a noble and expected response to this type of problem. They will likely spend a lot more money and time investigating and testing the onboard oxygen generation system in an effort to reduce the likelihood that it malfunctions.

But what if the industry is too close to the problem and looking at it wrong?

Years ago, when computers were just starting to be a thing you could purchase for a couple thousand dollars, I received a call from an engineer at a steel mill that had implemented the software my company was creating to run industrial machines. He stated, “The software isn’t working right; you should come see for yourself.” On arrival, I was escorted to the plant where a large gantry crane was moving a ladle of liquid metal overhead. Even though I used to operate a nuclear power plant on a submarine, I really dislike molten metal. Anyway, the engineer showed me how the software had been configured to control the crane. As the crane moved along, the engineer was clicking on the stop button with a wired mouse (no wireless or touchscreens were available yet) and complaining that the software wasn’t working right. As the ladle neared the end of travel, and my heart climbed further up into my throat, he pulled the plug on the computer to stop it. I asked where the E-Stop button was, and he said it was on the computer screen we were just using before he pulled the plug. We had a long discussion about how the Emergency-Stop had to operate in every possible situation to disable power to the device and that by no means could it be in a computer program.

But that is the problem today. We are not putting in the E-Stop to shut the computer off or override the system in an emergency that the programmer didn’t think of or the hacker created.

The F-35 has already had problems with the onboard O2 generation system that led to a firmware upgrade[3]. While significant effort goes into firmware programming for critical systems, mistakes happen, and upgrading firmware or other software in any system creates an opportunity to transfer the wrong code or bad code, including possible malware.

In process safety, we assemble all the pertinent documents and appropriate personnel into a working group to perform a process hazards analysis, hazards and operability study, or other similar study. We discuss every possible scenario that could generate a consequence in detail to determine if the risk of the consequence has adequate layers of protection to prevent it from occurring.

Today, you can extend the study with a Security PHA Review, which analyzes whether the scenario can be created by a hacker or malware and whether the layers of protection are also vulnerable to a hacker or malware. You can even extend the analysis to include a plain code problem, like uploading new firmware that results in a malfunction.
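To make the idea concrete, here is a rough sketch in the spirit of a layers-of-protection calculation. It is not the Security PHA Review method itself. The `Layer` class, the layer names, the failure probabilities, and the initiating frequency are all hypothetical values invented for illustration. The sketch multiplies an initiating-event frequency by each layer's probability of failure on demand, then repeats the calculation with no credit given to any layer that a hacker, malware, or a bad firmware upload could defeat.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Layer:
    """One layer of protection (all values here are hypothetical)."""
    name: str
    pfd: float       # probability of failure on demand, between 0 and 1
    hackable: bool   # True if a hacker, malware, or bad firmware could defeat it


def mitigated_frequency(initiating_per_year, layers, assume_cyber_attack=False):
    """Frequency of the consequence after crediting the layers.

    When assume_cyber_attack is True, hackable layers get no credit,
    which is the same as treating them as certain to fail.
    """
    credited = [
        layer for layer in layers
        if not (assume_cyber_attack and layer.hackable)
    ]
    return initiating_per_year * prod(layer.pfd for layer in credited)


# Hypothetical scenario: loss of breathable oxygen supply.
layers = [
    Layer("software low-oxygen alarm", pfd=0.1, hackable=True),
    Layer("independent backup oxygen bottle, manual valve", pfd=0.01, hackable=False),
]
INITIATING_PER_YEAR = 0.1  # assumed frequency of the initiating malfunction

normal = mitigated_frequency(INITIATING_PER_YEAR, layers)
under_attack = mitigated_frequency(INITIATING_PER_YEAR, layers, assume_cyber_attack=True)

print(f"all layers credited:       {normal:.1e} per year")
print(f"hackable layers discarded: {under_attack:.1e} per year")
```

The gap between the two results shows how much of the protection depends on software that can be compromised. A hardwired layer, like the E-Stop in the crane story, keeps its credit in both cases.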

I can only hope there is a working group of pilots and technical experts working through some type of hazards and operability study too. Yes, I am aware that flying a fighter jet and getting shot at is dangerous, just like operating a nuclear power plant in a submarine that sinks in the ocean is dangerous. But shouldn’t the working group analyze all of the scenarios where the risk of a significant consequence is greatest and suggest areas where extra effort should be spent to reduce likelihood?

Likewise, I hope someone will start adding similar efforts to advanced technology for systems like commercial aircraft and driverless vehicles. We are designing cars now with advanced technology that will not let the operator make mistakes like opening the trunk while driving down the freeway. However, that same logic can work against us in an emergency by preventing us from turning off the car when it is out of control on the freeway. Since the automobile industry still doesn’t put seat belt cutters and devices to break the windows easily in cars, I suspect they will leave the E-Stop out of driverless cars too.

Want to know more? Contact us and we will walk you through how to perform a Security PHA Review or any other type of risk analysis that focuses on reducing the likelihood of the consequence you fear most.

References:

[1] Nikkei Asian Review, “F-35 crash shows problems still lurk behind stealth fighter: Oxygen supply system raises questions as search for pilot and plane continues” by Tetsuro Kosaka, Nikkei senior staff writer, April 23, 2019 https://asia.nikkei.com/Spotlight/Comment/F-35-crash-shows-problems-still-lurk-behind-stealth-fighter

[2] Dayton Daily News, “Wright Patt researchers hunt for clues in F-35 stealth fighter issue” by Barrie Barber, July 26, 2017 https://www.daytondailynews.com/news/wright-patt-researchers-hunt-for-clues-stealth-fighter-issue/hhIGNUw1lnnUhpwUCz3ynI/

[3] The Register, “Breathless F-35 pilots to get oxygen boost via algorithm tweak” by Gareth Corfield, July 20, 2017 https://www.theregister.co.uk/2017/07/20/f35_oxygen_system_algorithm_tweak/